Companies are going all-in on artificial intelligence right now, investing millions or even billions into the area while slapping the AI initialism on their products, even when doing so seems strange and pointless.

Heavy investment and increasingly powerful hardware tend to mean more expensive products. To discover if people would be willing to pay extra for hardware with AI capabilities, the question was asked on the TechPowerUp forums.

The results show that over 22,000 people, a massive 84% of the overall vote, said no, they would not pay more. More than 2,200 participants said they didn’t know, while just under 2,000 voters said yes.

AI for IT companies is looking more and more like 3D was for the movie industry.

All fanfare and overhype, a small handful of examples that do seem a solid step forward with millions of others that are just a polished turd. Massive investment for something the market has not demanded.

It’s just a gimmick, a new “feature” to justify higher product prices.

barely a feature, just a buzzword

People already aren’t paying for them, nVidia’s main source of income is industry use and not consumer parts, right now.

40% of translators report having lost income due to it :0

Pepper Potts levels of capability with local storage and I’ll just hand you my card.

someone tried to sell me a fucking AI fridge the other day. Why the fuck would I want my fridge to “learn my habits?” I don’t even like my phone “learning my habits!”

Why does a fridge need to know your habits?

It has to keep the food cold all the time. The light has to come on when you open the door.

What could it possibly be learning

Hi Zron, you seem to really enjoy eating shredded cheese at 2:00am! For your convenience, we’ve placed an order for 50lbs of shredded cheese based on your rate of consumption. Thanks!

We also took the liberty of canceling your health insurance to help protect the shareholders from your abhorrent health expenses in the far future

If your fridge spies on you, certain people can gain better insight into how healthy your food habits are, how organized you are, how often things go bad and are thrown out, which medicines (the kind that must be kept cold) you store there, and how often you use them.

That will then affect your insurances, your credit rating, and possibly many other ratings other people are interested in.

I wish products followed your lead and had no AI features, 1995 Toyota Corolla :/

I think you’re being sarcastic, but I unironically agree. Cars and fridges can, and should stay dumb, with the notable exception of battery management systems in electric vehicles. That’s the single acceptable use case for a car IMHO.

I think CarPlay is a wonderful feature. My car should absolutely allow syncing up to my phone. I don’t think it should send telemetry or anything like that though. But I think internal process monitoring should also be a thing. Display error codes, show me that a tire is low, monitor a battery, etc., but the manufacturer shouldn’t get that info. My car shouldn’t know my sex life, and the manufacturer definitely shouldn’t.

Oh I absolutely agree, some things don’t need to be “smart”.

Imagine if someone put a microchip in a potato peeler claiming that it would add features like “data logging the amount of pressure applied to the potato to ensure clean peels”. The reason why they won’t do that is that the data only benefits the user, and not the company’s profit margins.

So I can see what you like to eat, then it can tell your grocery store, then your grocery store can raise the prices on those items. That’s the point. It’s the same thing with those memberships and coupon apps. That’s the end goal.

They can see what you like to eat by what you’re buying, LOL. No, not this.

A fridge can give them information on how you eat.

- Know when you’re about to put groceries in so it makes the fridge colder so the added heat doesn’t make things go bad.

- Know when you don’t use it and let it get a tiny bit warmer to save a teeny bit of power. (The vast majority of power is cooling new items, not keeping things cold though.)

- Tell you where things are?

- Ummm… Maybe give you an optimized layout of how to store things?

- Be an attack vector on your home’s wifi

- Wait, no, uh,

- Push notifications

- Do you not have phones?

To remind you when you should go buy groceries haha

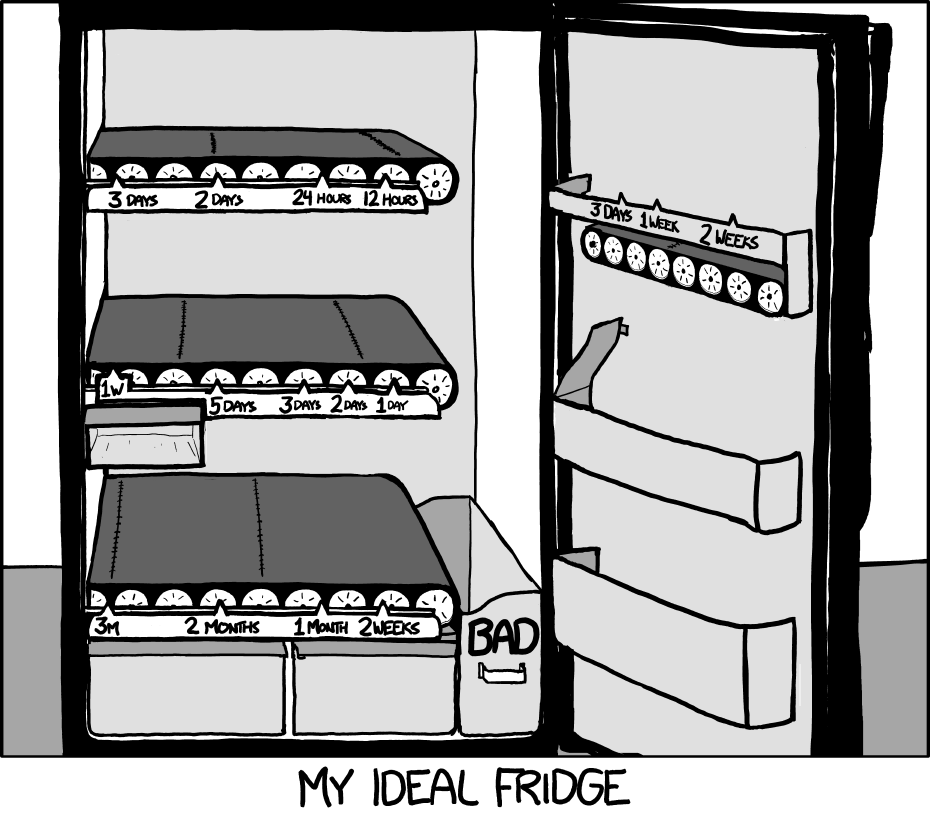

I still want this fridge. (Source)

Now THIS I could get behind! Still not AI though. it’s a very dumb timer system that would be very useful. 1950’s tech could do this!

it doesn’t seem all that hard to make, as long as you don’t mind the severely reduced flexibility in capacity and glass bottles shattering against each other at the bottom

Not to mention the increased expense, loudness, greater difficulty cleaning, and many more points of failure!

always xkcd

I’m still pissed about the fact that I can’t buy a reasonably priced TV that doesn’t have WiFi. I should never have left my old LG Plasma bolted to the wall of my previous house when I sold it. That thing had a fantastic picture and doubled as a space heater in the winter.

Projector gang checking in 🤓📽️

Everything alright here?

You can always join us in the peaceful realm of select input.

(there are still WiFi-free options)

what’s the affordable option for daytime viewing with the curtains open?

Audio description.

I want AI in my fridge for sure. Grocery shopping sucks. Forgetting how old something was sucks. Letting all the cool out to crawl around to see what I have sucks.

I want my fridge to be like the Sims, just get deliveries or pick up the order. Fill it out and get told what ingredients I have. Bonus points if you can just tell me what recipes I can cook right now, even better if I can ask for time frame.

That would be sick!

Still not going to give ecorp all of my data or put some half-baked Internet of Things device on my WiFi for it. But it would be cool.

Would you be willing to destroy the whole planet in order to make millions of these fridges?

Absolutely this. There IS a scenario in which I would love a “smart” or “AI” fridge, but it’s gotta be damn impressive to even be worth my time.

It needs to know everything in my fridge, how long it’s been there and its expiration date, and I want it to build grocery lists for me based on what is low, and let me know ahead of time that I should use something up that’s going bad soon. Bonus points if it recommends some options for how to do that based on my tastes. And I want to do this without having to manually input or remove everything.

But we’re still SO far from being able to do this reliably, let alone at any kind of acceptable price point, and yet fridge makers keep shoving out dumb fridges with a screen on them and calling them “smart”. I hate it.

For sure playing ads on my fridge or just spying on me aren’t “smart” at all to me.

Ye, that’d be sick! and that’s also not what was being sold! this fridge did none of that. What exactly made it “AI” I didn’t bother to find out, but I work in IT. I guarantee it wasn’t this. Also, not convinced I want my fridge to be able to spend my money for me. I want to be able to have a Ramen month if I need/want

Automatic spending definitely takes next level of trust for sure!

And it would improve your life zero. That is what is absurd about LLMs in their current iteration, they provide almost no benefit to a vast majority of people.

All a learning model would do for a fridge is send you advertisements for whatever garbage food is on sale. Could it make recipes based on what you have? Tell it you want to slowly get healthier and have it assist with grocery selection?

Nah, fuck you and buy stuff.

Exactly, it’s entirely about extra monetization. They all think in terms of hype and money, never in terms of life improvement.

I’d actually love AI to control something like a home assistant setup by learning how I like things and predicting change (mind you I still need to get it set up at all). But most people don’t even want a smart home.

Make something that makes the unpleasant parts of life easier and people will be happy with it

“enhanced”

There’s really no point unless you work in specific fields that benefit from AI.

Meanwhile every large corpo tries to shove AI into every possible place they can. They’d introduce ChatGPT to your toilet seat if they could

Which would be appropriate, because with AI, there’s nothing but shit in it.

Imagining a chatgpt toilet seat made me feel uncomfortable

Aw maaaaan. I thought you were going to link that youtube sketch I can’t find anymore. Hide and go poop.

Don’t worry, if Apple does it, it will sell like fresh cookies worldwide

Idk, they can’t even sell VR.

“Shits are frequently classified into three basic types…” and then gives 5 paragraphs of bland guff

With how much scraping of reddit they do, there’s no way it doesn’t try ordering a poop knife off of Amazon for you.

It’s seven types, actually, and it’s called the Bristol scale, after the Bristol Royal Infirmary where it was developed.

Someone did a demo recently of AI acceleration for 3D upscaling (think DLSS/AMD’s equivalent) and it showed a nice boost in performance. It could be useful in the future.

I think it’s kind of like ray tracing. We don’t have a real use for it now, but eventually someone will figure out something that it’s actually good for and use it.

AI acceleration for 3d upscaling

Isn’t that not only similar to, but exactly what DLSS already is? A neural network that upscales games?

But instead of relying on the GPU to power it, the dedicated AI chip did the work. Like it had its own distinct chip on the graphics card that would handle the upscaling.

I forget who demoed it, and searching for anything related to “AI” and “upscaling” gets buried with just what they’re already doing.

That’s already the nvidia approach, upscaling runs on the tensor cores.

And no, it’s not something magical, it’s just matrix math. AI workloads are lots of convolutions on gigantic, low-precision floating-point matrices. Low precision because neural networks are robust against random perturbation, and more rounding is exactly that: random perturbations. There’s no point in spending electricity and heat on high precision if it doesn’t make the output any better.
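To put a rough number on the "rounding is just random perturbation" point, here is a minimal sketch in plain Python. It is not a neural network and not how any real hardware rounds: `quantize`, `dot`, `compare`, and the step size of 1/16 are all made up for illustration. It rounds both operands of one large dot product to a coarse grid and compares the resulting error with the bound you would get if every rounding error pushed in the same direction.

```python
import random

def quantize(x, step):
    """Round x to the nearest multiple of step (a crude stand-in for low precision)."""
    return round(x / step) * step

def dot(a, b):
    """Plain dot product of two equal-length lists."""
    return sum(x * y for x, y in zip(a, b))

def compare(n=10_000, step=1 / 16, seed=0):
    """Return (exact, coarse, worst_case) for one random dot product.

    worst_case bounds the error if every rounding error pushed the same way.
    """
    rng = random.Random(seed)
    weights = [rng.uniform(-1, 1) for _ in range(n)]
    inputs = [rng.uniform(-1, 1) for _ in range(n)]
    exact = dot(weights, inputs)
    coarse = dot([quantize(w, step) for w in weights],
                 [quantize(x, step) for x in inputs])
    half = step / 2  # largest rounding error on a single value
    worst_case = n * (2 * half + half * half)
    return exact, coarse, worst_case

exact, coarse, worst_case = compare()
print(f"exact={exact:.3f} coarse={coarse:.3f}")
print(f"actual error={abs(coarse - exact):.3f} worst case={worst_case:.1f}")
```

In a run like this the actual error should come out far below the worst case, because the individual rounding errors point in both directions and mostly cancel. It only illustrates the arithmetic; it says nothing about how a specific trained network behaves.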

The kicker? Those tensor cores are less complicated than ordinary GPU cores. For general-purpose hardware, and that also includes consumer-grade GPUs, it’s way more sensible to make sure the ALUs can deal with 8-bit floats and leave everything else the same. That stuff is going to be standard by the next generation of even potatoes: every SoC with an included GPU has enough oomph to sensibly run reasonable inference loads. And by “reasonable” I mean actually quite big; as far as I’m aware, Firefox’s built-in translation, for example, runs on the CPU because the models are small enough.

Nvidia OTOH is very much in the market for AI accelerators and figured it could corner the upscaling market and sell another new generation of cards by making their software rely on those cores even though it could run on the other cores. As AMD demonstrated, their stuff also runs on nvidia hardware.

What’s actually special sauce in that area are the RT cores, that is, accelerators for ray casting through BVH trees. That’s indeed specialised hardware, but those things are nowhere near fast enough to compute enough rays for even remotely tolerable outputs, which is where all that upscaling/denoising comes into play.

Nvidia’s tensor cores are inside the GPU, this was outside the GPU, but on the same card (the PCB looked like an abomination). If I remember right in total it used slightly less power, but performed about 30% faster than normal DLSS.

Found it.

I can’t find a picture of the PCB though, that might have been a leak pre reveal and now that it’s revealed good luck finding it.

Having to send full frames off of the GPU for extra processing has got to come with some extra latency/problems compared to just doing it actually on the GPU… and I’d be shocked if they have motion vectors and other engine stuff that DLSS has, which would require the games to be specifically modified for this adaptation. IDK, but I don’t think we have enough details about this to really judge whether it’s useful or not, although I’m leaning on the side of ‘not’ for this particular implementation. They never showed any actual comparisons to DLSS either.

As a side note, I found this other article on the same topic where they obviously didn’t know what they were talking about and mixed up frame rates and power consumption. It’s very entertaining to read:

The NPU was able to lower the frame rate in Cyberpunk from 263.2 to 205.3, saving 22% on power consumption, and probably making fan noise less noticeable. In Final Fantasy, frame rates dropped from 338.6 to 262.9, resulting in a power saving of 22.4% according to PowerColor’s display. Power consumption also dropped considerably, as it shows Final Fantasy consuming 338W without the NPU, and 261W with it enabled.
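A quick sanity check on the numbers in that quote, assuming each saving is computed as (before - after) / before. The helper name `percent_drop` is made up for illustration. If the figures labelled as frame rates reproduce the quoted power savings, they are probably watts rather than FPS:

```python
def percent_drop(before, after):
    """Relative reduction from before to after, as a percentage."""
    return (before - after) / before * 100

# Figures the article labels as "frame rates" (taken from the quote).
cyberpunk = percent_drop(263.2, 205.3)
final_fantasy = percent_drop(338.6, 262.9)

print(f"Cyberpunk: {cyberpunk:.1f}%")          # prints Cyberpunk: 22.0%
print(f"Final Fantasy: {final_fantasy:.1f}%")  # prints Final Fantasy: 22.4%
```

Both results land on the 22% and 22.4% that the article reports as power savings, which fits the reading that the "frame rate" figures are really power draw.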

I’ve been trying to find some better/original sources [1] [2] [3] and from what I can gather it’s even worse. It’s not even an upscaler of any kind, it apparently uses an NPU just to control clocks and fan speeds to reduce power draw, dropping FPS by ~10% in the process.

So yeah, I’m not really sure why they needed an NPU to figure out that running a GPU at its limit has always been wildly inefficient. Outside of getting that investor money of course.

Personally I would choose a processor with AI capabilities over a processor without, but I would not pay more for it

Any “ai” hardware you buy today will be obsolete so fast it will make your dick bleed

it will just be not as fast as the newer stuff

it will just be ~~not as fast~~ even more slow as the newer stuff

Most people won’t pay for it because a lot of AI stuff is done cloud side. Even stuff that could be done locally is done in the cloud a lot. If that wasn’t possible, probably more people would want the hardware. It makes more sense for corporations to invest in hardware.

Depends on what kind of AI enhancement. If it’s just more things nobody needs and solves no problem, it’s a no brainer. But for computer graphics for example, DLSS is a feature people do appreciate, because it makes sense to apply AI there. Who doesn’t want faster and perhaps better graphics by using AI rather than brute forcing it, which also saves on electricity costs.

But that isn’t the kind of things most people on a survey would even think of since the benefit is readily apparent and doesn’t even need to be explicitly sold as “AI”. They’re most likely thinking of the kind of products where the manufacturer put an “AI powered” sticker on it because their stakeholders told them it would increase their sales, or it allowed them to overstate the value of a product.

Of course people are going to reject white collar scams if they think that’s what “AI enhanced” means. If legitimate use cases with clear advantages are produced, it will speak for itself and I don’t think people would be opposed. But obviously, there are a lot more companies that want to ride the AI wave than there are legitimate use cases, so there will be quite some snake oil being sold.

well, i think a lot of these cpus come with a dedicated npu, idk if it would be more efficient than the tensor cores on an nvidia gpu for example though

edit: whatever npu they put in does have the advantage of being able to access your full cpu ram though, so I could see it might be kinda useful for things other than custom zoom background effects

But isn’t RAM slower than a GPU’s VRAM? Last year people were complaining that local models had suddenly become very slow on the same GPU, and it turned out that a new Nvidia driver had automatically enabled a setting that lets the GPU spill into system RAM once its VRAM fills up. That annoyed people trying to run bigger models, since they would have preferred a crash, so they could try again with lower settings, over the longer generation times that regular RAM added.

I just want good voice to text that runs on my own phone offline

FUTO Keyboard & voice input?

Fr, this one is great

They’ll pay for it. When the tech companies decide it’s a thing to make money off & advertise it, all the good ants will buy, buy, buy, and the rest of the time they will work, work, work for it.

Can’t help but think of it as a scheme to steal the consumers’ compute time and offload AI training to their hardware…

…just under 2,000 voters said “yes.”

And those people probably work in some area related to LLMs.

It’s practically a meme at this point:

Nobody:

Chip makers: People want us to add AI to our chips!

The even crazier part to me is some chip makers we were working with pulled out of guaranteed projects with reasonably decent revenue to chase AI instead

We had to redesign our boards and they paid us the penalties in our contract for not delivering so they could put more of their fab time towards AI

That’s absolutely crazy. Taking the Chicago School MBA philosophy to things as time consuming and expensive to set up as silicon production.

No, but I would pay good money for a freely programmable FPGA coprocessor.

If the AI chip is implemented as one, and is useful for other things I’m sold.

I think manufacturers need to get a lot more creative about simplified computing. The RPi Pico’s GPIO engine is powerful yet simple, and a good example of what is possible with some good application analysis and forethought.

Which part of the Pico are you referring to specifically? Never heard the term “GPIO engine” before. Is that sort of like the USB stack but for GPIO?

I think they meant PIO (programmable IO). It’s like a small processor tied to some of the IO pins. There’s a very small set of instructions and some state machines.

It can be used to implement your own IO protocols without worrying about the issues that come with bit-banging from the cpu.
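For anyone who wants a feel for why that helps, here is a toy model in plain Python. It is only a sketch: it is not the real PIO instruction set or any Pico API, and `run_pio`, the tuple format, and the two ops are invented for illustration. The one property it borrows from the description above is that each instruction takes a fixed number of clock cycles (one, plus an optional delay), so the waveform on the pin is the same every time, regardless of what a CPU would be doing meanwhile.

```python
def run_pio(program, cycles):
    """Run a toy state machine and return the pin level on every clock tick.

    program: list of (op, arg, delay) tuples. "set" drives the pin to arg,
    "nop" leaves it alone. Each instruction takes 1 tick plus `delay` extra
    ticks, and the program counter wraps back to the start when it runs out.
    """
    pin = 0
    pc = 0
    trace = []
    while len(trace) < cycles:
        op, arg, delay = program[pc]
        if op == "set":
            pin = arg
        elif op != "nop":
            raise ValueError(f"unknown op: {op}")
        # The pin holds its level for the instruction tick and the delay ticks.
        trace.extend([pin] * (1 + delay))
        pc = (pc + 1) % len(program)
    return trace[:cycles]

# Hypothetical two-instruction program: 3 ticks high, 3 ticks low, forever.
square_wave = [("set", 1, 2), ("set", 0, 2)]
print("".join(str(level) for level in run_pio(square_wave, 12)))  # prints 111000111000
```

With bit-banging from the CPU, an interrupt or a slow loop iteration would stretch some of those high or low periods; in this model, as on dedicated IO hardware, the timing comes purely from the instruction sequence.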

Problem for the big market is that it’s hardly profitable. In fact, make things too easily multipurpose and you undercut your specialized devices’ opportunities. Why buy a smart device for 500 dollars that requires a monthly subscription when you could get a 100 dollar device with a popular preload of a solution on it?

Like when the WRT54G came out in the day and OpenWRT basically drove Cisco to buy out Linksys to neuter the “home router” to stop it displacing expensive products in the business sector. The WRT54G was the best product for the market, but not the best product to exist for vendor profitability.

I have a few Pi Picos but I didn’t know about it. Can you please elaborate? I’ve been using them just like any other ESP32, STM32, or ESP8266 I have.