Hi, I have Jellyfin installed in a VM with 24 cores and 32GiB RAM (the VM is also used for Docker). Whenever I attempt to play higher-quality files, Jellyfin crashes after a few minutes. I haven’t seen it struggle with lower-quality media.

Here are some logs:

- FFmpeg.Transcode-2024-03-24_16-11-43_d48825174d455ae3ff859d8b28582853_ce3f3ebf.log
- upload_org.jellyfin.androidtv_0.16.7_20240324161053_d2befd034e424a3490e7ea55af1fe1f2.log
- Fmpeg.Transcode-2024-03-24_16-11-38_d48825174d455ae3ff859d8b28582853_dafa4555.log
- FFmpeg.DirectStream-2024-03-24_16-08-12_d48825174d455ae3ff859d8b28582853_dac7115f.log

I cannot for the life of me figure out what’s wrong. I’ve tried disabling plugins, different clients, hard resets, etc., but it still crashes.

Can someone enlighten me?? :(

It looks like you’re using NVIDIA for transcodes. Try disabling hardware acceleration temporarily to test whether it is related.

It’s already off

I have this issue whenever I have to deal with transcoding. The stream will usually die after a few minutes of watching. I haven’t seen anything out of the ordinary in the logs. I’ve just resigned myself to lugging around the device I have that can direct stream the files.

Are you using software transcoding?

It’s supposed to be hardware transcoding on an Intel CPU (using VAAPI). No idea if it’s actually working.

Check your CPU usage while a transcode is running. If it is noticeably lower than it would be with software transcoding, hardware acceleration is working.
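If you want a number rather than eyeballing `top`, here is a rough sketch, assuming a Linux guest. It samples the aggregate `cpu` line of `/proc/stat` twice and works out the busy percentage in between. The function names are made up for this example and are not part of Jellyfin.

```python
def parse_cpu_line(line):
    """Return (busy, total) jiffies from the aggregate 'cpu' line of /proc/stat."""
    fields = [int(x) for x in line.split()[1:]]
    # Field order: user nice system idle iowait irq softirq steal guest guest_nice
    idle = fields[3] + (fields[4] if len(fields) > 4 else 0)  # idle + iowait
    total = sum(fields[:8])  # guest time is already counted inside user/nice
    return total - idle, total


def cpu_percent(before, after):
    """Busy percentage between two (busy, total) samples."""
    busy = after[0] - before[0]
    total = after[1] - before[1]
    return 100.0 * busy / total if total > 0 else 0.0


def sample(path="/proc/stat"):
    """Read one (busy, total) sample; the first line of /proc/stat is the aggregate."""
    with open(path) as f:
        return parse_cpu_line(f.readline())
```

Call `sample()` once while the stream is transcoding, wait a few seconds, call it again, and pass both results to `cpu_percent`. Comparing that figure with hardware acceleration switched on and off should show whether the GPU is actually doing the work.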

This is pretty basic but have you checked how many cores and how much memory you have allocated to your VM?

It’s in the first line

I have jellyfin installed in a VM with 24 cores and 32GiB RAM

Oh. I thought that was for the whole server. In that case, I’m all out of ideas.

Sorry, my bad, I could have written it more clearly.

If the crashes are seemingly at random when transcoding I would suspect overheating hardware. Transcoding uses more energy and produces more heat than playing directly. If it were a software issue it would either work or not.

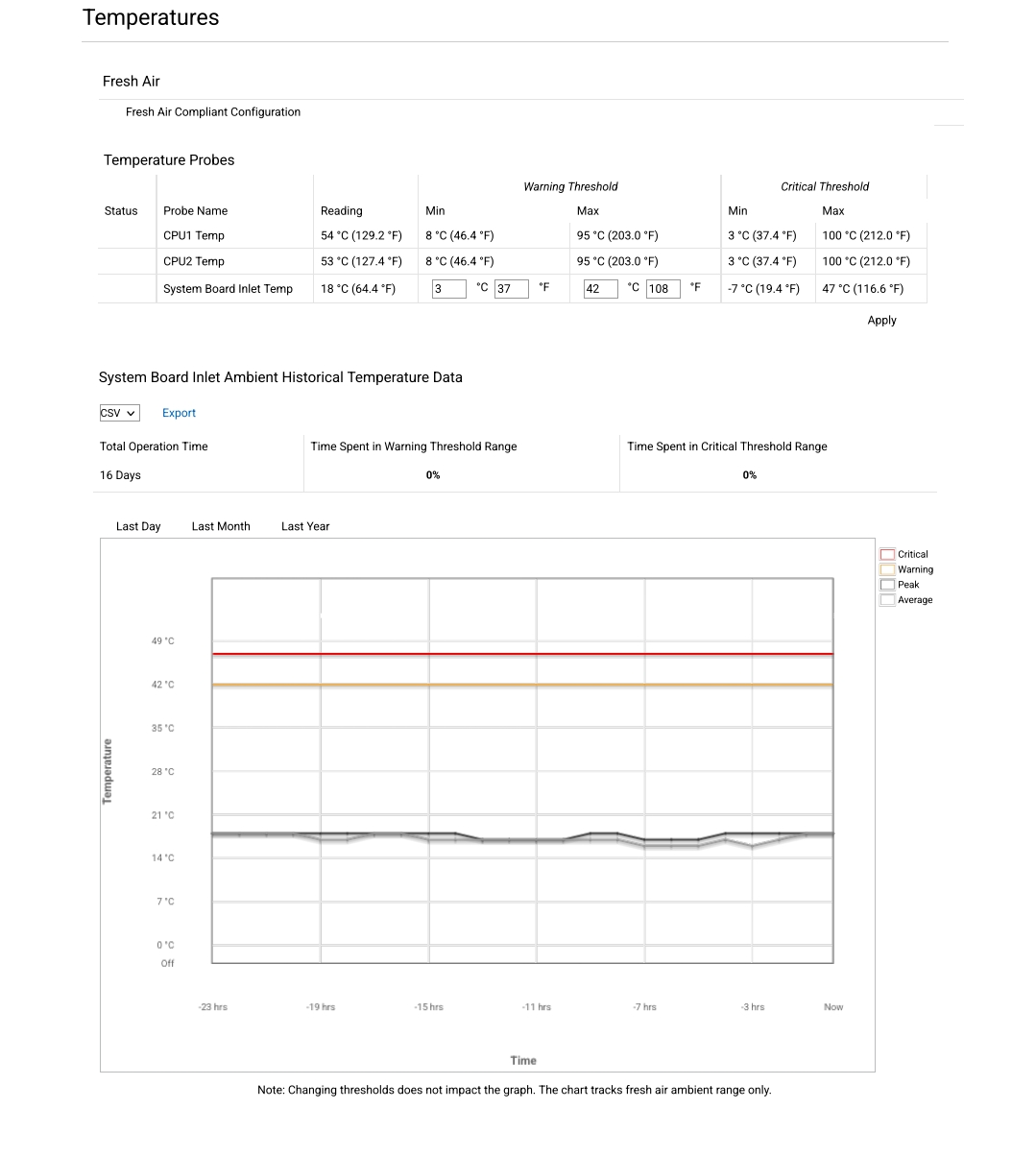

iDRAC logs show normal temps

I can’t seem to open the second link, but what video driver version is running?

Pastebin wouldn’t accept it (said it was NSFW, maybe because of killing processes?) so I used paste.io, which I now see is blocked by adblocking. I am not using hardware transcoding, and I am unsure where to find the driver version.

Why in a vm? What’s that good for?

I have a couple of VMs on my server: TrueNAS, Pi-hole, etc. One is for my media, with the *arrs in Docker containers and Jellyfin installed directly (not as a container, as it wasn’t binding to the media dir properly).

But why in a vm?

Why not in a VM? All my stuff runs in VMs.

Doesn’t the vm produce an overhead? More power consumption and it can’t use the full capabilities of the machine?

There are many benefits to VMs. You can limit how much RAM is available to each one, so one app doesn’t eat all of your RAM. Same with CPU. Virtual machines can be backed up, uploaded to remote storage, and restored. When it’s time to do a big update on your main machine (either changing OS or getting new hardware), restoring VMs is super simple compared to the alternative.

You can limit them in docker as well

https://phoenixnap.com/kb/docker-memory-and-cpu-limit

You can spin up the same container on another machine with one command.

Docker seems to be easier, no?

Docker is great, and I have it running in multiple VMs. For me to restore everything without VMs would be a little tedious.

I’d have to search for Docker install instructions, follow the instructions like importing keys, adding the repository, doing the post-install stuff (adding my user to the docker group), etc. Not a big deal, but it’s something.

Then I’d start copying data, making sure to keep the same folder structure so my compose files work. Then I start running all the commands to get all the containers back up and running (or in my case, creating 20+ stacks in Portainer).

This is all a bit tedious for me compared to opening the web interface for Proxmox, clicking on the backup (which is just “there” because it’s on a hard drive (or a ZFS pool in my case)), and clicking “restore”. Once restored it just boots up and my Docker stuff is good to go.

You can run multiple different OSes. I have TrueNAS, Windows Server, Ubuntu Server for Docker, etc.

You can limit how much RAM is available to each one, so one app doesn’t eat all of your RAM. Same with CPU.

This can be done with containers and you don’t get the overhead of virtualizing a whole operating system for every service/app you might be hosting.

Virtual Machines can be backed up, uploaded to remote storage, and restored.

This can also be done with containers in a more elegant way as there’s no need to back up any VM/OS data.

E.g. I have a Docker Compose file that can almost immediately stand up a container with the right settings/image, point it to my restored data and be up and running in no time. The best part is I don’t need to back up the container/OS because that data is irrelevant.

When it’s time to do a big update on your main machine (either changing OS or getting new hardware), restoring VM’s is super simple compared to the alternative.

With the alternative you just restore your data and run `docker-compose up -d`. Docker will handle the process of building, starting and managing the service.

Simple example: Your Minecraft server died but you have backups. You just restore the data to `/docker/minecraft`. Then (to keep things really simple) you just run:

    docker run -d -p 25565:25565 --name minecraft -e EULA=TRUE -v /docker/minecraft:/data itzg/minecraft-server

and in a few minutes your server is ready to go.

If that’s the way you’d prefer to do it, I highly recommend taking that approach.

VMs can also use something called kernel same-page merging (https://en.wikipedia.org/wiki/Kernel_same-page_merging), meaning a single machine could run something like 52 instances of Windows XP with 1 GB of RAM each on a host that has only 16 GB of RAM.

So for example, say I needed to run a bunch of ARK servers. I could put them all in VMs, and since they’re all loading mostly the same assets, I could run a lot of them in a very small amount of memory.
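On a Linux/KVM host you can get a rough idea of how much KSM is saving from the counters under `/sys/kernel/mm/ksm`. A minimal sketch, assuming KSM is enabled on the host and treating `pages_sharing` as the number of duplicate pages that were merged away. The function names are only illustrative.

```python
import os
from pathlib import Path

KSM_DIR = Path("/sys/kernel/mm/ksm")  # standard sysfs location on Linux hosts


def read_ksm_counter(name, base=KSM_DIR):
    """Read one integer counter such as 'pages_sharing' or 'pages_shared'."""
    return int((base / name).read_text().strip())


def ksm_saved_bytes(pages_sharing, page_size):
    """Approximate memory saved: each 'sharing' page is a merged duplicate."""
    return pages_sharing * page_size


def ksm_saved_now():
    """Convenience wrapper for a live host; needs KSM sysfs files to exist."""
    page_size = os.sysconf("SC_PAGE_SIZE")
    return ksm_saved_bytes(read_ksm_counter("pages_sharing"), page_size)
```

Running `ksm_saved_now()` on the hypervisor (not inside a guest) returns an estimate in bytes. It will be 0 if KSM is not running or nothing has been merged yet.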

That’s crazy

Why do you guys do it?

I run it in a VM just like everything else. I use PCIe passthrough for hardware.

Are you using hardware decoding?

No

There’s a couple things that stick out to me in these logs:

- The androidtv client logs show the crash was due to a time-out while waiting for the server to respond to a request for over 3 seconds.
- You included a DirectStream log; that’s usually not too hard for even a Raspberry Pi to handle reasonably well.
- The first log I looked at showed libx264 was using 36 threads.

So my guess is that, due to video encoding not scaling all that well across larger numbers of threads, your server is being bogged down with this transcoding and isn’t providing http responses fast enough to your client device(s).
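To check this guess, you could time a simple request against the server while a transcode is running. A minimal sketch: the 3-second default mirrors the time-out seen in the client log, and the URL in the usage note is an assumption about your setup (8096 is Jellyfin’s default HTTP port).

```python
import time
import urllib.request


def probe(url, timeout=3.0):
    """Return seconds taken to fetch `url`, or None if it fails or times out."""
    start = time.monotonic()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            resp.read()
    except OSError:
        # URLError, HTTPError and socket time-outs are all OSError subclasses
        return None
    return time.monotonic() - start
```

For example, `probe("http://your-server:8096/health")` called every few seconds during playback. The `/health` path is what I believe Jellyfin exposes for health checks, but any lightweight URL on the server will do. If the result creeps towards 3 seconds or comes back as `None` shortly before the client dies, that supports the overload theory.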

A simple way to troubleshoot this would be to explicitly set the transcoding thread count to something a bit lower than what your server has, say 16 or 20, and see if that does any better.
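For what it’s worth, 36 is 1.5 times 24, which as far as I know is what x264 picks when the thread count is left on auto. Here is a small sketch for pulling the thread count out of a transcode log and choosing a lower cap. Both function names are made up, and the default cap of 16 is just the lower of the two values suggested above.

```python
import re

# Match "threads=N" but not "lookahead_threads=N" or "sliced_threads=N"
THREADS_RE = re.compile(r"(?<![A-Za-z_])threads=(\d+)")


def x264_thread_counts(log_text):
    """Return every x264 'threads=N' value found in the log text."""
    return [int(n) for n in THREADS_RE.findall(log_text)]


def capped_threads(logical_cpus, cap=16):
    """Pick a thread count no higher than `cap` and never below 1."""
    return max(1, min(logical_cpus, cap))
```

Feed `x264_thread_counts` the contents of one of the FFmpeg.Transcode logs to confirm what the encoder used, then enter the result of `capped_threads(24)` as the transcoding thread count in Jellyfin and see whether playback survives longer.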

An obvious potential fix would be to use hardware acceleration if it’s available to you. I run my Jellyfin server off of a little N100 mini PC and it can transcode 4K HDR 70 Mbps video (tonemapping included) at about 45 fps due to the hw acceleration. That said, I know it can be tricky to set up in a VM and you may not have the HW accel capability in your server CPU anyhow.

Wow, I never considered that too many cores may be the issue. I will for sure try that.

I believe my CPU is compatible, but I don’t think this is iGPU transcoding. Pretty sure my server does have an iGPU (although I’ve never actually plugged in a screen so I can’t be sure). I will research passing through either of those.

I also read that it’s possible to use a different server for just transcoding. I have an old laptop I’ve been considering setting up for that, but its network speeds are shite.

@sammeeeeeee @entropicdrift not sure if Intel iGPUs are the same, but my Ryzen 5700G iGPU won’t transcode unless it has a monitor plugged in and powered on when it boots…

Oh well, that’s definitely not possible for me (unless iDRAC counts)
