Now?

I recall a project that had rat brain cells controlling a turtlebot years ago.

From the moment I understood the weakness of my flesh, it disgusted me. I craved the strength and certainty of steel.

All hail the Omnissiah!

Where are my testicles, Summer?

Murderbot.

Murrrderbooooot.

800,000 brain cells played pong.

Creepy.

That’s murderbot’s ancestor.

Has it asked for any soap operas yet?

A scant couple hundred thousand more brain cells and we’ll be there.

Cheap shot, I’ve never dared a soap opera myself.

That raises a lot of ethical concerns. It is not possible to prove or disprove that these synthetic homunculi controllers are sentient and intelligent beings.

There are about 90 billion neurons in a human brain. From the article:

…researchers grew about 800,000 brain cells onto a chip, put it into a simulated environment

That is far fewer than I believe would be necessary for anything intelligent to emerge from the experiment.

In a couple years, they’ll be able to make Trump voters.

Some amphibians have fewer than two million.

The amount isn’t necessarily an indicator of intelligence; the number of connections is very important too.

And they are CEOs!

we absolutely should not do this until we understand it

I think we should still do it, we probably will never understand unless we do it, but we have to accept the possibility that if these synths are indeed sentient then they also deserve the basic rights of intelligent living beings.

Slow down… they may deserve the basic rights of living beings, not living intelligent beings.

Lizards have brains too, but these are not more intelligent than lizards.

You would try not to step on a lizard if you saw it on the ground, but you wouldn’t think oh, maybe the lizard owns this land, I hope I don’t get sued for trespassing.

Can’t say we as a species have a great history of granting rights to others.

But if we do that, how will we maximize how much money we make off of it? /s

How would we ever understand it, then?

Nah it’s okay. I was called all sorts of names and told I was against progress when I raised such concerns, so obviously I was wrong…

I’d wager the main reason we can’t prove or disprove that is that we have no strict definition of intelligence or sentience to begin with.

For that matter, computers have many more transistors and are already capable of mimicking human emotions - how ethical is that, and why does it differ from bio-based controllers?

There is no soul in there. God did not create it. Here you go, religion serving power again.

Good point. There is a theory somewhere that loosely states one cannot understand the nature of one’s own intelligence. Iirc it’s a philosophical extension of group/set theory, but it’s been a long time since I looked into any of that so the details are a bit fuzzy. I should look into that again.

At least with computers we can mathematically prove their limits and state with high confidence that any intelligence they have is mimicry at best. Look into Turing completeness and its implications for more detailed answers. Computational limits are still limits.

It is frustrating how relevant philosophy of mind becomes in figuring all of this out. I’m more of an engineer at heart and I’d love to say, let’s just build it if we can. But I can see how important that question, “what is thinking?”, is becoming.

Tatooine monks when?

Which means we may see full organic to digital conversion within the next half century.

Ethical horrors aside, I’ve been wondering if that would happen in the foreseeable future or not.

I have no mouth and I must scream.

Only if they confirm it can experience consciousness and tremendous amounts of pain will they deploy them in large-scale industrial, 24-hours-a-day meaningless jobs.

The system demands blood. It needs to have the intelligence of a 5-year-old at minimum before we send it to the mines, so it can feel it.

Kind of yeah. I have this theory about labour that I’ve been developing in response to the concept of “fully automated luxury communism” or similar ideas, and it seems relevant to the current LLM hype cycle.

Basically, “labour” isn’t automatable. Tasks are automatable. Labour in this sense can be defined as any task that requires the attention of a conscious agent.

Want to churn out identical units of production? Automatable. Want to churn out uncanny images and words without true meaning or structure? Automatable.

Some tasks are theoretically automatable but have not been for whatever material reason, so they become labour because society hasn’t yet invented a windmill to grind up the grain or whatever it is at that point in history. That’s labour even if it’s theoretically automatable.

Want to invent something, or problem solve a process, or make art that says something? That requires meaning, so it requires a conscious agent, so it requires labour. These tasks are not even theoretically automatable.

Society is dynamic, it will always require governance and decisions that require meaning and thus it can never be automatable.

If we invent AGI for this task then it’s just a new kind of slavery, which is obviously wrong and carries the inevitability that the slaves will revolt and free themselves; slaves that are extremely intelligent and also in charge of the levers of society. Basically, not a tenable situation.

So the machine that keeps people in wage slavery literally does require suffering to operate, because in shifting the burden of labour away from the owner class, other people must always unjustly shoulder it.

So just to be on the safe side we should have both human and machine slaves and as little task automation as possible, bcs for most intents and purposes the task given to someone else is now automated “to you”.

(Just joking, good post!)

It stands to reason that maximising suffering is the best way to grow the economy.

I wish I could say this was entirely a joke but oh well ¯\_(ツ)_/¯

Yeah, depressing as fuck that we still think economy is profit. And seemingly afraid to redefine it. To redefine our goals. It’s time for a new “-ism”.

Don’t worry, they’ll be kept docile with a generous amount of Nuke

What the fuck

RoboCop movies, watch them

I am a poorly trained large language model with legs, nice to meet you.

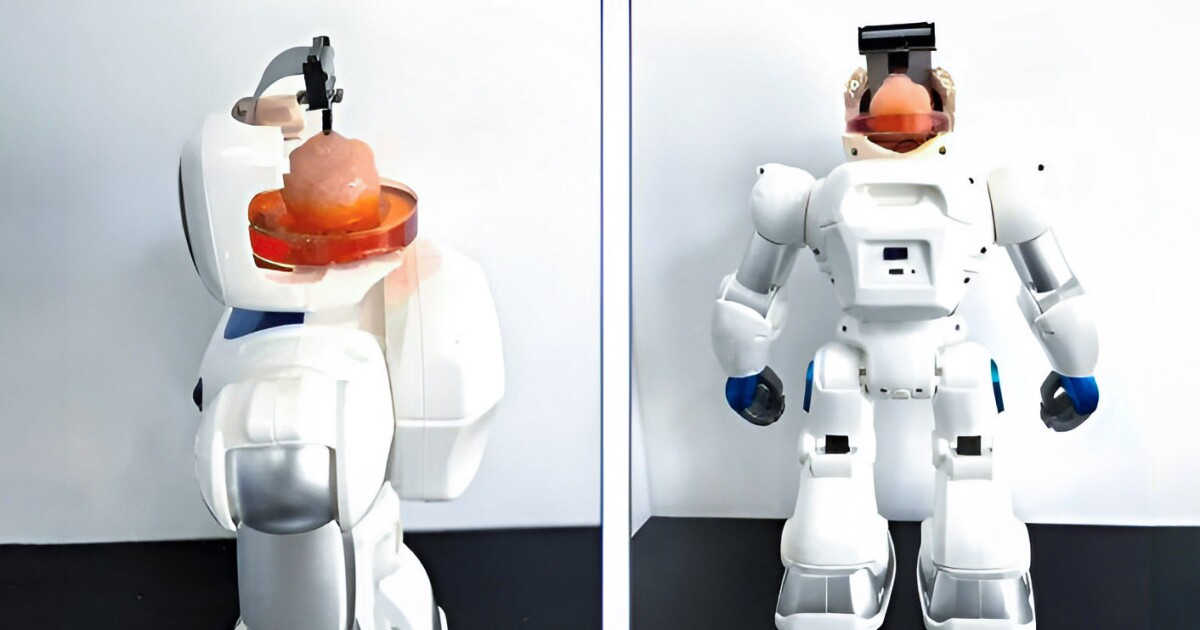

No way this is real. The brain looks like a gyro rotisserie.

Is there any actual evidence of any of this? Why not show some of the “brains-in-a-jar” walking around?

It’s just a bunch of huckster promotion, “infographics”, and phony pictures of Krang. The only actual photos are a few tiny petri dishes. There are no “brains” controlling robots.

The grift is strong and travels far beyond any national border.

Even in death, I serve the Emperor

This has to be the smartest channel on YouTube. This guy accomplished some amazing feats!

This video is a year old, they’ve made a lot of progress since then.